Leveraging the capabilities of multicore processors in robust, reliable, and certifiable ways.

By Steve DiCamillo, Technical Marketing and Business Development Manager at LDRA.

Single-core processors (SCPs) have traditionally been used for safety-critical applications, despite the benefits of multicore processors (MCPs). Those benefits include higher performance, lower power consumption, and smaller size, all of which make MCPs attractive for applications with size, weight, and power constraints. However, embedded software developers face unique challenges when dealing with timing and interference issues on heterogeneous multicore systems, particularly in safety-critical applications. The complexity of MCP platforms can make strict timing requirements extremely difficult to meet.

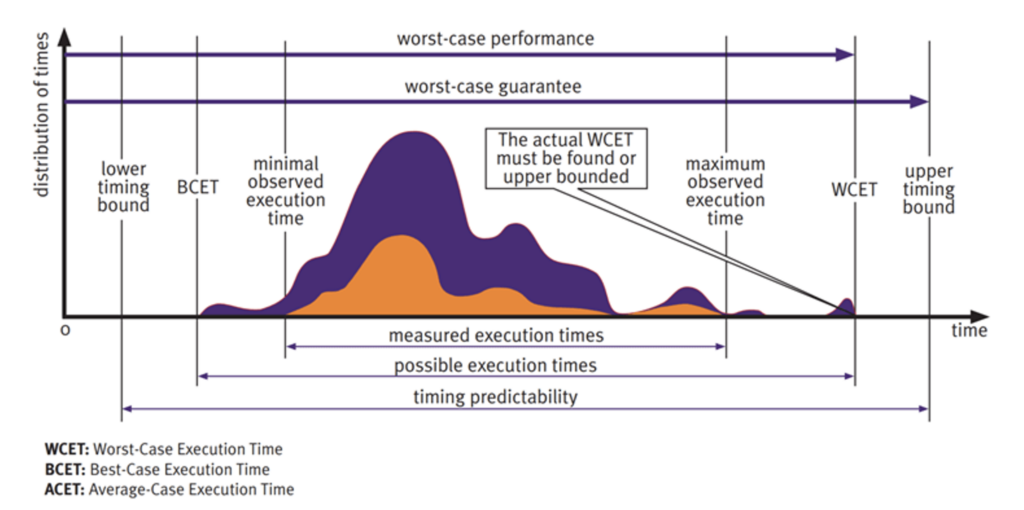

Because MCPs run multiple processes in parallel that share hardware resources, they introduce an extra level of development and testing complexity compared to SCPs. For MCPs, there is no known efficient algorithm for finding a schedule of X tasks on Y processor cores such that all tasks meet their deadlines. On an MCP, hardware interference can occur anywhere hardware is shared between processes. For example, layers of hierarchical memory are often shared, so interference is possible in those layers. These interference channels cause the execution-time distribution to spread: instead of a tight peak, it becomes wide with a long tail. This variability makes it difficult for developers to ensure precise timing for tasks, creating significant problems when they are building applications in which meeting each individual task's worst-case execution time is critical.

Where functional safety is paramount, this is critical.
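To see why scheduling is hard even before interference is considered, take a simplified version of the problem: partitioning independent periodic tasks onto cores so that no core is loaded beyond 100% utilization. This is equivalent to bin packing, for which no polynomial-time algorithm is known, so an exact search may have to examine up to Y^X assignments. The minimal C sketch below assumes implicit-deadline tasks scheduled by EDF on each core (where a per-core utilization of at most 100% is an exact schedulability test) and ignores shared-resource interference entirely; `task_t`, `place_tasks`, and `partition_tasks` are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define MAX_CORES 16

/* Hypothetical task model: utilization (C/T) in parts per thousand,
 * kept as an integer so the capacity check below is exact. */
typedef struct {
    unsigned util_permille;
} task_t;

/* Depth-first search with backtracking: tries every core for task `next`.
 * In the worst case this visits n_cores^n_tasks assignments. */
static bool place_tasks(const task_t *tasks, size_t n_tasks, size_t next,
                        unsigned *core_load, size_t n_cores, size_t *core_of)
{
    if (next == n_tasks)
        return true; /* every task has been placed */
    unsigned u = tasks[next].util_permille;
    for (size_t c = 0; c < n_cores; c++) {
        if (core_load[c] + u > 1000u)
            continue; /* core c would exceed 100% utilization */
        core_load[c] += u;
        core_of[next] = c;
        if (place_tasks(tasks, n_tasks, next + 1, core_load, n_cores, core_of))
            return true;
        core_load[c] -= u; /* undo and try the next core */
    }
    return false; /* no core can take this task given earlier choices */
}

/* Returns true and fills core_of[] if a feasible partition exists. */
static bool partition_tasks(const task_t *tasks, size_t n_tasks,
                            size_t n_cores, size_t *core_of)
{
    unsigned core_load[MAX_CORES] = {0};
    assert(n_cores > 0 && n_cores <= MAX_CORES);
    return place_tasks(tasks, n_tasks, 0, core_load, n_cores, core_of);
}
```

For example, tasks with utilizations of 60%, 50%, 40%, and 50% fit on two cores as {60%, 40%} and {50%, 50%}, while three 60% tasks cannot fit on two cores at all. On a real MCP, interference would inflate each task's execution time depending on what runs on the other cores, so even a feasible assignment found this way is not a timing guarantee.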

Unlike developers working with single-core systems, developers of hard real-time systems on MCPs cannot rely on static estimation methods to generate usable approximations of best-case execution times (BCETs) and worst-case execution times (WCETs). Instead, they must rely on iterative tests and measurements to gain as much confidence as possible in understanding the timing characteristics of tasks.

The importance of understanding WCETs

In hard real-time systems, such as those that run mission- and safety-critical applications including advanced driver-assistance systems (ADAS) and automatic flight control systems, meeting strict timing requirements is essential for ensuring predictability and determinism. In contrast to soft real-time systems, where missing timing deadlines has less severe consequences, understanding the WCETs for hard real-time tasks is essential because missed deadlines can be catastrophic.

Developers must consider both the BCETs and the WCETs for each CPU task. The BCET represents the shortest execution time, while the WCET represents the longest execution time. Figure 1 illustrates how these values are determined given an example set of timing measurements.
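As a minimal sketch of the calculation Figure 1 describes, the observed BCET and WCET are simply the minimum and maximum of a set of measured execution times. The C code below assumes the measurements are already available as nanosecond values; the `timing_summary_t` type and `summarize` function are hypothetical. An observed maximum is only a lower bound on the true WCET: it is only as good as the coverage of the tests that produced it.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical summary of the measured execution times of one task. */
typedef struct {
    uint64_t bcet_ns; /* shortest observed execution time */
    uint64_t wcet_ns; /* longest observed execution time (high-water mark) */
    uint64_t mean_ns; /* arithmetic mean, rounded down */
} timing_summary_t;

static timing_summary_t summarize(const uint64_t *samples_ns, size_t n)
{
    assert(samples_ns != NULL && n > 0);
    timing_summary_t s = { samples_ns[0], samples_ns[0], 0 };
    uint64_t sum = 0;
    for (size_t i = 0; i < n; i++) {
        if (samples_ns[i] < s.bcet_ns)
            s.bcet_ns = samples_ns[i];
        if (samples_ns[i] > s.wcet_ns)
            s.wcet_ns = samples_ns[i];
        sum += samples_ns[i]; /* assumes the total fits in 64 bits */
    }
    s.mean_ns = sum / n;
    return s;
}
```

A mean far below the observed WCET is one symptom of the long-tailed distribution that interference produces on MCPs.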

Measuring the WCET is particularly important because it provides an upper bound on the task’s execution time, ensuring that critical tasks complete within the required time constraints.

In SCP setups, meeting upper timing bounds can be guaranteed as long as sufficient CPU capacity is planned and maintained. In MCP-based systems, meeting these bounds is more difficult because there are no effective methods for calculating a guaranteed tasking schedule that accounts for multiple processes running in parallel across heterogeneous cores and sharing hardware between those cores. Contention for these hardware shared resources (HSRs) is largely unpredictable, which disrupts the measurement of task timing.
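The single-core guarantee can be illustrated with classic response-time analysis for fixed-priority preemptive scheduling, a standard schedulability test rather than a method this article prescribes. A task's worst-case response time R_i is found by iterating R = C_i + Σ ⌈R / T_j⌉ · C_j over all higher-priority tasks j until R stops changing or exceeds the deadline D_i. The sketch assumes independent periodic tasks, integer time units, D_i ≤ T_i, and tasks listed in priority order; `rt_task_t` and `response_time` are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical periodic task with integer timing parameters. Tasks are
 * passed in priority order: index 0 has the highest priority. */
typedef struct {
    uint32_t wcet;     /* C_i */
    uint32_t period;   /* T_i */
    uint32_t deadline; /* D_i, assumed <= T_i */
} rt_task_t;

/* Response-time analysis on ONE core: iterate
 * R = C_i + sum over higher-priority j of ceil(R / T_j) * C_j
 * to a fixed point. Returns false if R would exceed D_i. */
static bool response_time(const rt_task_t *tasks, size_t i, uint32_t *r_out)
{
    assert(tasks[i].deadline <= tasks[i].period);
    uint64_t r = tasks[i].wcet;
    for (;;) {
        uint64_t next = tasks[i].wcet;
        for (size_t j = 0; j < i; j++) /* preemptions by higher priorities */
            next += ((r + tasks[j].period - 1) / tasks[j].period) * tasks[j].wcet;
        if (next > tasks[i].deadline)
            return false; /* deadline can be missed */
        if (next == r) {
            *r_out = (uint32_t)r; /* fixed point: worst-case response time */
            return true;
        }
        r = next; /* R only grows, and is capped by D_i, so this terminates */
    }
}
```

For tasks with (C, T) of (1, 4), (2, 6), and (3, 12), the analysis gives response times of 1, 3, and 10, all within their deadlines. On an MCP, each C term would itself vary with interference from other cores, which is why the same reasoning no longer yields a guarantee.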


Understanding CAST-32A and A(M)C 20-193

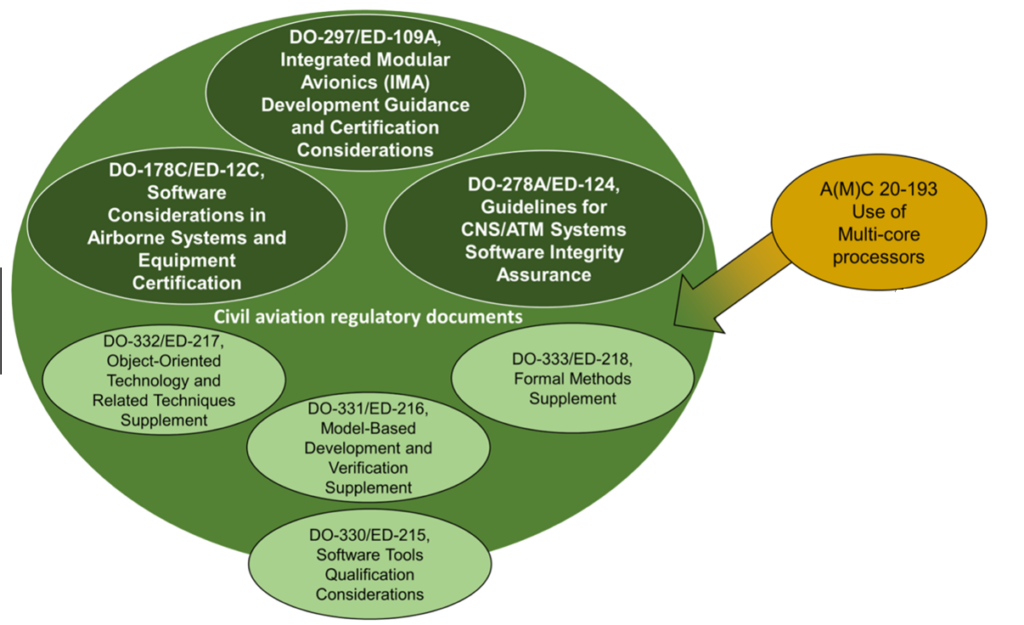

To address these challenges, embedded software developers can turn to guidance documents such as CAST-32A, AMC 20-193, and AC 20-193. In CAST-32A, the Certification Authorities Software Team (CAST) outlines important considerations for MCP timing and sets software development lifecycle (SDLC) objectives to better understand the behavior of a multicore system. While not prescriptive requirements, these objectives guide and support developers toward adhering to widely accepted standards such as DO-178C.

In Europe, the AMC 20-193 document has superseded CAST-32A, and the AC 20-193 document is expected to do the same in the U.S. These successor documents, collectively referred to as A(M)C 20-193, largely duplicate the principles outlined in CAST-32A.

To better understand the landscape of guidance available to developers, Figure 2 provides a visual representation of the relationships between key civil aviation documents.

A(M)C 20-193 places significant focus on developers providing evidence that the allocated resources of a system are sufficient to allow for WCETs. This evidence requires adapting development processes and tools to iteratively collect and analyze execution times in controlled ways that help developers optimize code throughout the lifecycle.

Although A(M)C 20-193 does not specify exact methods for achieving its objectives, developers can employ various techniques for measuring timing and interference on MCP-based systems, with static analysis complementing those measurements. Static analysis helps ensure that the measurements are truly representative of worst-case scenarios by allowing them to be focused on the most demanding execution paths through the code.
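To illustrate how static information can steer measurements toward demanding paths, the following sketch finds the most expensive entry-to-exit path through a control-flow graph, given a static cost estimate for each basic block. It is a simplified longest-path computation, not LDRA's analysis and not a full static WCET method (which must also bound loops and model hardware effects). It assumes an acyclic graph whose blocks are numbered in topological order, with block 0 as the entry and the last block as the exit; `cfg_t` and `costliest_path` are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define MAX_BLOCKS 32

/* Hypothetical acyclic control-flow graph. Blocks are numbered in
 * topological order (every edge goes from a lower to a higher index);
 * block 0 is the entry and block n-1 is the exit. cost[] holds a static
 * per-block estimate, such as an instruction count. */
typedef struct {
    size_t n;
    unsigned cost[MAX_BLOCKS];
    bool edge[MAX_BLOCKS][MAX_BLOCKS]; /* edge[a][b]: control may flow a -> b */
} cfg_t;

/* Writes the most expensive entry-to-exit path into path[] (entry first),
 * stores its summed cost in *total, and returns the path length
 * (0 if the exit is unreachable). */
static size_t costliest_path(const cfg_t *g, size_t *path, unsigned *total)
{
    unsigned best[MAX_BLOCKS]; /* highest cost of any path reaching a block */
    size_t pred[MAX_BLOCKS];   /* predecessor on that path */
    bool reached[MAX_BLOCKS] = {false};
    assert(g->n > 0 && g->n <= MAX_BLOCKS);

    best[0] = g->cost[0];
    pred[0] = 0;
    reached[0] = true;
    /* Topological order guarantees best[a] is final before a is expanded. */
    for (size_t a = 0; a < g->n; a++) {
        if (!reached[a])
            continue;
        for (size_t b = a + 1; b < g->n; b++) {
            if (!g->edge[a][b])
                continue;
            unsigned via_a = best[a] + g->cost[b];
            if (!reached[b] || via_a > best[b]) {
                best[b] = via_a;
                pred[b] = a;
                reached[b] = true;
            }
        }
    }

    size_t last = g->n - 1, len = 0;
    if (!reached[last])
        return 0;
    for (size_t b = last;; b = pred[b]) { /* walk back to the entry */
        path[len++] = b;
        if (b == 0)
            break;
    }
    for (size_t i = 0; i < len / 2; i++) { /* reverse into entry-first order */
        size_t tmp = path[i];
        path[i] = path[len - 1 - i];
        path[len - 1 - i] = tmp;
    }
    *total = best[last];
    return len;
}
```

The returned path identifies which branches a test should force so that the measured execution time reflects the most demanding route through the code, rather than whichever route the test data happened to take.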

Guidance for developers

A(M)C 20-193 specifies MCP timing and interference objectives for software planning through to verification that include the following:

• Documentation of MCP configuration settings throughout the project lifecycle, as the nature of software development and testing makes it likely that configurations will change. (MCP_Resource_Usage_1)

• Identification of, and mitigation strategies for, MCP interference channels to reduce the likelihood of runtime issues. (MCP_Resource_Usage_3)

• Ensuring MCP tasks have sufficient time to complete execution and adequate resources are allocated when hosted in the final deployed configuration. (MCP_Software_1 and MCP_Resource_Usage_4)

• Exercising data and control coupling between software components during testing to demonstrate that their impacts are restricted to those intended by the design. (MCP_Software_2)

A(M)C 20-193 covers partitioning in time and space, allowing developers to determine WCETs and verify applications separately if they have verified that the MCP platform itself supports robust resource and time partitioning. Making use of these partitioning methods helps developers mitigate interference issues, but not all HSRs can be partitioned in this way. In either case, standards such as DO-178C require evidence of adequate resourcing.
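Time partitioning is commonly implemented as a static table of windows within a repeating major frame. As a minimal sketch under that assumption (the `window_t` layout and `schedule_is_partitioned` function are hypothetical), the check below confirms that every window fits in the frame and that no two windows on the same core overlap. It says nothing about caches, memory controllers, or other HSRs, which is why time partitioning alone does not eliminate interference.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical static time-partition table entry: within a repeating major
 * frame, a partition owns [start_us, start_us + length_us) on one core. */
typedef struct {
    unsigned core;
    unsigned start_us;
    unsigned length_us;
} window_t;

/* True if every window lies inside the major frame and no two windows on the
 * same core overlap. Shared caches, buses, and memory are NOT checked. */
static bool schedule_is_partitioned(const window_t *w, size_t n, unsigned frame_us)
{
    assert(frame_us > 0);
    for (size_t i = 0; i < n; i++) {
        if (w[i].length_us == 0 || w[i].start_us + w[i].length_us > frame_us)
            return false; /* empty window or spills past the frame boundary */
        for (size_t j = i + 1; j < n; j++) {
            if (w[i].core != w[j].core)
                continue; /* different cores may run concurrently */
            bool disjoint = w[i].start_us + w[i].length_us <= w[j].start_us ||
                            w[j].start_us + w[j].length_us <= w[i].start_us;
            if (!disjoint)
                return false;
        }
    }
    return true;
}
```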

How to analyze execution times on MCP platforms

Multiple methods have proven effective for WCET analysis and for meeting the A(M)C 20-193 guidance. Automated development tools can help developers implement these methods.

Halstead’s metrics and static analysis: Halstead’s complexity metrics can act as an early warning system for developers, providing insights into the complexity and resource demands of specific segments of code. For example, Halstead’s metrics reflect how algorithms are implemented or expressed in different languages, evaluating software module size, software complexity, and data flow, and they can be calculated precisely from static analysis of the source code. Such an approach can identify which sections of code are the most demanding of processing time, but it cannot provide absolute values for maximum elapsed time.
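Halstead’s measures follow directly from four counts that static analysis extracts from the source: distinct operators (n1), distinct operands (n2), total operators (N1), and total operands (N2). The sketch below computes vocabulary n = n1 + n2, length N = N1 + N2, volume V = N log2 n, difficulty D = (n1 / 2)(N2 / n2), and effort E = D × V. The types and function names are hypothetical, and a small helper computes the base-2 logarithm so the code does not depend on libm; on a hosted system, `log2()` from `<math.h>` would be simpler.

```c
#include <assert.h>

/* Base-2 logarithm of x >= 1 without libm: remove whole powers of two,
 * then recover fractional bits by repeated squaring. */
static double log2_approx(double x)
{
    double y = 0.0, bit = 0.5;
    assert(x >= 1.0);
    while (x >= 2.0) {
        x /= 2.0;
        y += 1.0;
    }
    for (int i = 0; i < 32; i++) { /* 32 fractional bits */
        x *= x;
        if (x >= 2.0) {
            x /= 2.0;
            y += bit;
        }
        bit /= 2.0;
    }
    return y;
}

/* Hypothetical container for Halstead's base counts from static analysis. */
typedef struct {
    unsigned n1; /* distinct operators */
    unsigned n2; /* distinct operands */
    unsigned N1; /* total operator occurrences */
    unsigned N2; /* total operand occurrences */
} halstead_counts_t;

typedef struct {
    unsigned vocabulary; /* n = n1 + n2 */
    unsigned length;     /* N = N1 + N2 */
    double volume;       /* V = N * log2(n) */
    double difficulty;   /* D = (n1 / 2) * (N2 / n2) */
    double effort;       /* E = D * V */
} halstead_metrics_t;

static halstead_metrics_t halstead(halstead_counts_t c)
{
    halstead_metrics_t m;
    assert(c.n1 > 0 && c.n2 > 0);
    m.vocabulary = c.n1 + c.n2;
    m.length = c.N1 + c.N2;
    m.volume = m.length * log2_approx((double)m.vocabulary);
    m.difficulty = (c.n1 / 2.0) * ((double)c.N2 / c.n2);
    m.effort = m.difficulty * m.volume;
    return m;
}
```

For counts of n1 = 10, n2 = 8, N1 = 30, and N2 = 20, the difficulty is 12.5 and the volume is about 208.5. These values rank code by complexity; they do not translate into an execution time.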

The same static analysis also yields call diagrams, which show where the most demanding functions revealed by this analysis are exercised in the context of the whole code base.
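The context a call diagram provides can be approximated in code by walking a call graph backwards from a demanding function to find every function through which it can be reached. The sketch below assumes a small adjacency-matrix call graph; `call_graph_t` and `callers_of` are hypothetical names, and a real tool would build the graph from the source rather than by hand.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define MAX_FUNCS 32

/* Hypothetical call graph: calls[a][b] is true when function a calls b. */
typedef struct {
    size_t n;
    bool calls[MAX_FUNCS][MAX_FUNCS];
} call_graph_t;

/* Sets in_context[f] for every function f that can reach `hot` through a
 * chain of calls (including hot itself) and returns how many there are. */
static size_t callers_of(const call_graph_t *g, size_t hot, bool *in_context)
{
    size_t stack[MAX_FUNCS], top = 0, count = 1;
    assert(g->n <= MAX_FUNCS && hot < g->n);
    for (size_t f = 0; f < g->n; f++)
        in_context[f] = false;
    in_context[hot] = true;
    stack[top++] = hot;
    while (top > 0) {
        size_t callee = stack[--top];
        for (size_t f = 0; f < g->n; f++) {
            if (g->calls[f][callee] && !in_context[f]) {
                in_context[f] = true; /* f lies on a call chain to hot */
                stack[top++] = f;     /* each function is pushed at most once */
                count++;
            }
        }
    }
    return count;
}
```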

These metrics and others shed light on timing-related aspects of code, such as module size, control flow structures, and data flow. Identifying sections with larger size, higher complexity, and intricate data flow patterns helps developers prioritize their efforts and fine-tune the code segments that place the highest demands on processing time. Optimizing these resource-intensive areas early in the lifecycle reduces both the mitigation effort and the risk of timing violations.

Empirical analysis of execution times: Measuring, analyzing, and tracking individual task execution times helps developers find and mitigate issues in modules that fail to meet timing objectives. Dynamic analysis is essential to this process because it automates the measurement and reporting of task timings, reducing the manual workload on developers.

To ensure accuracy, developers must take three considerations into account:

1. The analysis must occur in the actual environment where the application will run, eliminating the influence of configuration differences between development and production, such as compiler options, linker options, and hardware features.

2. Sufficient tests must be executed repeatedly to account for environmental and application variations between runs, ensuring reliable and consistent results.

3. Automation is highly recommended for executing sufficient tests within a reasonable timeframe and for eliminating the influence of relatively slower manual actions.
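The three considerations above can be sketched as a simple automated harness that runs a task many times and records each execution time. This minimal C example is not the LDRA harness: it assumes a POSIX target where `clock_gettime` with `CLOCK_MONOTONIC` is available (a bare-metal target would more likely read a hardware cycle counter), and `measure_task` and `sample_workload` are hypothetical names. It should be built with the same compiler and linker options as the production image and run on the target hardware.

```c
#define _POSIX_C_SOURCE 199309L
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <time.h>

/* Monotonic time in nanoseconds (assumes a POSIX clock_gettime). */
static uint64_t now_ns(void)
{
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (uint64_t)ts.tv_sec * 1000000000u + (uint64_t)ts.tv_nsec;
}

/* Hypothetical harness: runs `task` `runs` times, stores each execution
 * time in samples_ns[], and tracks the shortest and longest observation. */
static void measure_task(void (*task)(void *), void *arg, size_t runs,
                         uint64_t *samples_ns, uint64_t *min_ns, uint64_t *max_ns)
{
    assert(runs > 0);
    *min_ns = UINT64_MAX;
    *max_ns = 0;
    for (size_t i = 0; i < runs; i++) {
        uint64_t t0 = now_ns();
        task(arg);
        uint64_t dt = now_ns() - t0;
        samples_ns[i] = dt;
        if (dt < *min_ns)
            *min_ns = dt;
        if (dt > *max_ns)
            *max_ns = dt; /* observed high-water mark */
    }
}

/* Hypothetical stand-in for a real task under test. */
static void sample_workload(void *arg)
{
    volatile unsigned long acc = 0;
    unsigned long iterations = *(const unsigned long *)arg;
    for (unsigned long i = 0; i < iterations; i++)
        acc += i;
}
```

Each sample includes the timer's own overhead, so the results are slightly pessimistic; tracking the maximum across many runs gives the observed high-water mark used as the measured WCET.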

The LDRA tool suite employs a “wrapper” test harness that exercises modules on the target device, automating timing measurements. Developers can define specific components under test, whether at the function level, a subsystem of components, or the overall system. Additionally, they can specify CPU stress tests, such as those generated by the open-source stress-ng workload generator, to further improve confidence in the analysis results.

Analysis of application control and data coupling: Control and data coupling analysis play a crucial role in identifying task dependencies within applications. Through these analyses, developers examine how the execution and data dependencies between tasks affect one another. The standards require these analyses not only to ensure that all couples have been exercised, but also because of their capacity to reveal potential problems.
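A core part of coupling analysis is comparing the couples defined by the design with those actually exercised during testing. In the minimal sketch below (the `couple_t` type, function names, and component names are hypothetical), one generic set difference answers two questions: which designed couples were never exercised, and which observed couples the design never intended.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical coupling point: component `from` passes control or data to `to`. */
typedef struct {
    const char *from;
    const char *to;
} couple_t;

static bool couple_equal(couple_t a, couple_t b)
{
    return strcmp(a.from, b.from) == 0 && strcmp(a.to, b.to) == 0;
}

/* Copies into out[] every couple in a[] that does not appear in b[] and
 * returns how many were copied. out[] must have room for n_a entries. */
static size_t couples_not_in(const couple_t *a, size_t n_a,
                             const couple_t *b, size_t n_b, couple_t *out)
{
    size_t n_out = 0;
    assert(out != NULL);
    for (size_t i = 0; i < n_a; i++) {
        bool found = false;
        for (size_t j = 0; j < n_b && !found; j++)
            found = couple_equal(a[i], b[j]);
        if (!found)
            out[n_out++] = a[i];
    }
    return n_out;
}
```

Passing the design first and the observed couples second lists untested couples; swapping the arguments lists couples that testing exercised but the design did not intend.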

The LDRA tool suite provides robust support for control and data coupling analyses. As illustrated in Figure 3, these analyses help developers identify critical sections of code requiring optimization or restructuring to improve the timing predictability and resource utilization of the application.

Conclusion

Ensuring timing requirements are met in hard real-time multicore systems is a complex undertaking. With numerous influences on task timing and analysis, development requires a disciplined approach to test setup and execution to leverage the capabilities of multicore processors in robust, reliable, and certifiable ways.

By understanding the A(M)C 20-193 guidance and applying analysis methods with appropriate automated tools, embedded software developers can better manage the complexities of hardware shared resource interference and address the coding issues that affect it. This is essential in ensuring the efficient and deterministic execution of critical workloads on multicore platforms.